Update: The trained model and instructions for using it can now be downloaded from Hugging Face here.

In this post, I summarize how I made use of Huggingface’s transformer library to re-solve an NLP problem related to the Vietnamese language.

The problem

After learning about Hidden Markov models (HMMs) more than 10 years ago, I decided to apply them to building a small but practical toy that auto-inserts accent marks for Vietnamese text.

In a nutshell, Vietnamese has some letters that carry additional marks. For example, in addition to the letter ‘a’, the Vietnamese alphabet also contains these “marked versions”: ă, â.

And for each of these 3 versions (a, ă, â), we can then put the 5 tones on them. An example for ‘ă’ will be: ắ (acute), ằ (grave), ẳ (hook), ẵ (tilde), ặ (dot).

(More info about the Vietnamese alphabet can be found here. Also, if you know French, the phenomenon is similar, except that Vietnamese uses accents much more frequently.)

As an example, from the original letter “a”, we’d have a total of 3 (“marked versions”) x 6 (5 tones + no-tone) = 18 accented forms.
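The count can be checked mechanically. The sketch below is only an illustration and not part of the original tool: it attaches Unicode combining tone marks to a, ă and â and normalizes the result to NFC. The names `BASES`, `TONES` and `accented_forms` are made up for this example.

```python
import unicodedata

# The plain letter plus its two "marked versions".
BASES = ["a", "ă", "â"]

# The 5 tones as Unicode combining marks, plus the no-tone case.
TONES = {
    "no tone": "",
    "acute": "\u0301",
    "grave": "\u0300",
    "hook": "\u0309",
    "tilde": "\u0303",
    "dot": "\u0323",
}

def accented_forms(bases=BASES, tones=TONES):
    """Return every base letter combined with every tone, in composed (NFC) form."""
    return [
        unicodedata.normalize("NFC", base + mark)
        for base in bases
        for mark in tones.values()
    ]

forms = accented_forms()
print(len(forms), " ".join(forms))
```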

(For our usage, inserting “accent marks” refers to both producing the marked versions and putting tones on them to produce the final, correct Vietnamese words.)

The problem can now be stated as: given a Vietnamese sentence / paragraph that has been stripped of its accent marks, recover the original sentence.
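To make the input side of the problem concrete, here is a minimal sketch of how accents can be stripped with Python's standard `unicodedata` module. It is an assumption about how one might build the no-accent text, not the code actually used for this project; the function name `strip_accents` is hypothetical. Note that “đ” has no Unicode decomposition, so it has to be mapped to “d” by hand.

```python
import unicodedata

def strip_accents(text):
    """Remove Vietnamese marks and tones, e.g. 'đường' -> 'duong'."""
    # 'đ' / 'Đ' do not decompose under NFD, so replace them explicitly.
    text = text.replace("đ", "d").replace("Đ", "D")
    # NFD splits each letter into its base letter plus combining marks.
    decomposed = unicodedata.normalize("NFD", text)
    # Drop every combining mark and keep the base letters.
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

print(strip_accents("Kính áp tròng biết đo đường huyết."))
```

Running a function like this over accented text yields the no-accent input, while the original text serves as the expected output.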

This is a practical problem as some people (and Vietnamese learners) want to type the non-accent version and have the software auto-insert the accent marks for them, as typing w/o accents is faster, and esp. helpful if the Vietnamese typing software (for typing the accented versions) hasn’t been installed on the computer.

The original HMM solution

The original solution, which has been running on VietnameseAccent.com, models the task as finding the hidden labels (the accented words) of the no-accent words, assuming the Markov property (= using bigrams).

The Viterbi algorithm is then used to find the optimal path among all possible ones.
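For readers who haven't seen it, the idea can be sketched as follows. This is a toy version under my own assumptions, not the code running on the site: `candidates` maps each no-accent word to its possible accented forms, `bigram_logp` holds log-probabilities of accented word pairs, and `unseen_logp` is an arbitrary penalty for pairs that were never observed. Emission probabilities are left out because each accented candidate corresponds to exactly one no-accent word, and start probabilities are ignored for brevity.

```python
import math

def viterbi_bigram(words, candidates, bigram_logp, unseen_logp=-10.0):
    """Return the highest-scoring accented sequence under a bigram model."""
    if not words:
        return []
    # Words without known candidates are kept unchanged.
    options = [candidates.get(w, [w]) for w in words]
    # best[i][c] = (score of the best path ending in c at position i, previous word)
    best = [{c: (0.0, None) for c in options[0]}]
    for i in range(1, len(words)):
        column = {}
        for cur in options[i]:
            column[cur] = max(
                (best[i - 1][prev][0] + bigram_logp.get((prev, cur), unseen_logp), prev)
                for prev in options[i - 1]
            )
        best.append(column)
    # Backtrack from the best final candidate.
    last = max(best[-1], key=lambda c: best[-1][c][0])
    path = [last]
    for i in range(len(words) - 1, 0, -1):
        last = best[i][last][1]
        path.append(last)
    return path[::-1]

# Toy statistics, invented for illustration only.
toy_candidates = {"ba": ["ba", "bà"], "me": ["me", "mẹ"]}
toy_bigrams = {("bà", "mẹ"): math.log(0.5), ("ba", "mẹ"): math.log(0.1)}
decoded = viterbi_bigram(["ba", "me"], toy_candidates, toy_bigrams)
print(decoded)
```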

When this was developed, I didn’t formally pin its accuracy down to a number, although I did find it “quite accurate” through manual testing.

But to help compare this version with the Transformer version I planned to build, this time I decided to evaluate its accuracy on a test set of about 15k sentences.

First, the accent-insertion accuracy is calculated for each sentence, and then the mean and median are taken over these per-sentence accuracies. For each sentence, accuracy is the percentage of words that are accented correctly, where words are obtained by splitting on spaces and removing punctuation.
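A minimal sketch of this metric is shown below. The helper names `words_of` and `sentence_accuracy` are hypothetical, and the punctuation handling is an assumption: it only strips ASCII punctuation from the ends of each token, which may differ from what the actual evaluation script did.

```python
import string
from statistics import mean, median

def words_of(sentence):
    """Split on whitespace and strip ASCII punctuation from each token."""
    tokens = (tok.strip(string.punctuation) for tok in sentence.split())
    return [tok for tok in tokens if tok]

def sentence_accuracy(predicted, gold):
    """Fraction of words in `predicted` that match `gold` exactly."""
    pred_words, gold_words = words_of(predicted), words_of(gold)
    if not gold_words or len(pred_words) != len(gold_words):
        raise ValueError("sentences must have the same, non-zero number of words")
    correct = sum(p == g for p, g in zip(pred_words, gold_words))
    return correct / len(gold_words)

gold = ["Kính áp tròng biết đo đường huyết.", "Bắt giam ba ‘trùm’ đề."]
pred = ["Kính áp trong biệt do đường huyết.", "Bắt giam bà ‘trùm’ dê."]
scores = [sentence_accuracy(p, g) for p, g in zip(pred, gold)]
print(scores, mean(scores), median(scores))
```

The two sentences are taken from the example outputs near the end of this post, where they score 4/7 and 3/5 respectively.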

For all of the experiments below, this is the way I’d refer to accuracies.

The current HMM’s accuracy is pretty good: with mean = 0.91, median = 0.94

The 2021 Transformer version

This problem of auto-inserting accent marks fits nicely into a token classification problem (similar to, for example, the classical problems of POS tagging and named-entity recognition in English).

In particular, each no-accent word is a “token” and all the possible accented versions of this word can be seen as its “labels”. The task is to select the correct label of this word in a given sentence context.

Using Huggingface’s Transformers lib, we can then take a BERT-like model pretrained on Vietnamese text and fine-tune it for our purpose.

The fine-tuning process is the standard one provided by the library, so I won’t go through it step by step.
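One detail of that standard recipe is still worth sketching, since it is specific to token classification: the tokenizer splits words into subword pieces, so word-level labels have to be spread over those pieces. The sketch below assumes the usual convention of labeling only the first piece of each word and marking everything else with -100 (the index that PyTorch's cross-entropy loss ignores). The function name is hypothetical, and `word_ids` is assumed to come from a fast tokenizer's `word_ids()` output; this is not necessarily the exact code used for this post.

```python
IGNORE_INDEX = -100  # positions with this label are skipped by the loss

def align_labels_to_subwords(word_ids, word_label_ids):
    """Map one label per word onto one label per subword token.

    word_ids: for each subword token, the index of the word it belongs to,
              or None for special tokens.
    word_label_ids: one integer label per original word.
    """
    aligned = []
    previous = None
    for wid in word_ids:
        if wid is None:
            # Special tokens carry no label.
            aligned.append(IGNORE_INDEX)
        elif wid != previous:
            # First piece of a word gets the word's label.
            aligned.append(word_label_ids[wid])
        else:
            # Later pieces of the same word are ignored.
            aligned.append(IGNORE_INDEX)
        previous = wid
    return aligned

# Hand-written example: 3 words, the first and third split into 2 pieces each.
example = align_labels_to_subwords([None, 0, 0, 1, 2, 2, None], [5, 7, 9])
print(example)
```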

For now, let’s go through the training results b/c this is where many interesting things were learned.

Fine-tuning Round 1

| Training size | Validation | Test |

| ~15k sents | ~ 15k sents | ~ 15k sents |

(The available training data were much larger, but at that point I wasn’t aware that Google Colab offers a high-RAM runtime, so 15k sents were all it could take before crashing.)

Let’s keep the default values for all parameters and start training. After 20 mins of training, below are the results produced by Seqeval.

| Epoch | Training Loss | Validation Loss | Precision | Recall | F1 | Accuracy |

| 1 | 2.134300 | 0.735763 | 0.697687 | 0.701565 | 0.699621 | 0.773819 |

| 2 | 0.717500 | 0.580149 | 0.759825 | 0.763575 | 0.761695 | 0.821985 |

| 3 | 0.568200 | 0.528949 | 0.782944 | 0.786753 | 0.784844 | 0.840678 |

And here’s the result on the test data set:

| overall_precision | overall_recall | overall_f1 | overall_accuracy |

| 0.784707 | 0.788418 | 0.786558 | 0.842166 |

The performance on test set was on par with the validation result so everything seems all right.

The only thing that wasn’t right is that this accuracy is still a lot lower than that of the HMM version (91% average accuracy)! I then proceeded to evaluate this model the same way I evaluated the original one.

When measured using the same method as for the original version, this is the result: mean = 0.78, median = 0.8

Oops. So it’s not 84%, but only about 78% on average (80% median). (A question for readers: why this (small) difference?)

Fine-tuning Round 2

In Colab high-ram mode, I could manage to get the training data up to 16x the previous round: so it’s now about 240K training sentences. The validation & test data sets remained the same.

Training now took about 4h (instead of 20 mins for the prev round).

Below are the results on the validation and test sets:

| Epoch | Training Loss | Validation Loss | Precision | Recall | F1 | Accuracy |

| 1 | 0.098000 | 0.163699 | 0.942804 | 0.944179 | 0.943491 | 0.958879 |

| 2 | 0.104000 | 0.117573 | 0.955958 | 0.956947 | 0.956452 | 0.968497 |

| 3 | 0.071500 | 0.109042 | 0.961098 | 0.962062 | 0.961580 | 0.972209 |

And here’s the result on the test data set:

| overall_precision | overall_recall | overall_f1 | overall_accuracy |

| 0.961755 | 0.962589 | 0.962172 | 0.972720 |

Wow, that’s an increase of about 13 percentage points in accuracy over the previous round: from 84.2% to now 97.2%, beating the HMM model’s avg accuracy of 91%!

This result was rather unbelievable, given the rather small training data set, so I went ahead and confirmed its performance using the same metric as for the HMM. And now I believe it: mean = 0.95, median = 1.0

Unbelievably, its median accuracy is 100% (vs HMM 94%) and the avg is 95% (vs HMM 91%)

This result has once again confirmed the superior power of deep learning models over traditional statistical methods, if sufficient training data are available. In theory, it’s not too hard to try to persuade oneself of this, but some concrete practical results are necessary to confirm it.

One last question that I wanted to ask was: can it do even better? What will happen if we keep training these models with more and more data?

Pushing more data

Below are 2 more training rounds with more data. Evaluated using the same method as for HMM:

| Training data size | Mean accuracy (test set) | Median accuracy (test set) |

| 240K sentences (Round 2 above) | 0.95 | 1.0 |

| 960K sents | 0.97 | 1.0 |

| 1200K sents | 0.97 | 1.0 |

The performance did increase with more training data, but only marginally and 97% is about the best that it could get with more data.

Better results may still be possible, but probably not with just adding more data.

See a new error analysis section below where the 3% error rate is discussed.

Some comparisons: HMM vs Transformer

Training data size

As shown in the table above, the smallest training data set that produced a model with 97% accuracy had about 1 million sentences (960K). At about 10 words per sentence on average, that comes to roughly 10M tokens.

When I reviewed the data set that was used to produce bigram stats for the HMM model, it had ~ 13M tokens.

It’s interesting to see that in this case, the transformer model could outperform the HMM model with a training data set of comparable size. [1]

Performance over long texts

To see if these models extend well to longer texts (texts with >= 200 tokens), I’ve reevaluated them over a test set of the same size, but with longer texts.

On the longer texts, the Transformer model is surprisingly resilient, with its performance dropping only slightly:

| Test data desc | Transformer’s mean accuracy | Transformer’s median accuracy |

| Random lengths (with the avg being 10 word tokens per text) | 0.97 | 1.0 |

| Long texts (>= 200 word tokens per text) | 0.93 | 0.94 |

In contrast, the HMM model suffered quite heavily, with its performance dropping to below 30%.

After some digging, the cause turned out to be missing bigram statistics: the model fails on bigrams it has never seen before. Longer (and esp. mixed-language) texts exacerbate this problem.

Longer sequences are challenging for HMM decoding, even when smoothing has been applied. But they don’t seem to pose any significant challenge to transformer-based models.

Inference speed

This is where the HMM model shines: in my experiments, its inference is 10 to 30 times faster than the transformer model’s.

On a text with ~10 word tokens, the HMM model needs only 0.01s to produce a result, compared to 0.1s to 0.3s for the transformer model.

The slower speed of neural network models in general is quite understandable, simply due to their massive number of nodes.

Error analysis of the finetuned model

The best model above has a mean accuracy of 0.97 and a median accuracy of 1.

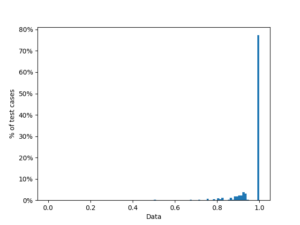

The following histogram provides the big picture (100 bins, each corresponding to 1%)

Error causes

Of the 157,191 tokens in the 14,825 sentences of the test data, 4,381 tokens were assigned a wrong label by the system. This makes a ~3% error rate.

There are 2 classes of errors. The first kind is due to an issue in the training data for words starting with “đ”, such as “điều”, “đã”, etc. This class contributes about 1/3 of all error cases.

More specifically, I’ve employed some linguistic rules to reduce the size of the label set (currently 500+ labels), and those rules mishandled a subset of words starting with “đ”, causing these words to be wrongly assigned the “no change” tag in the training data. As the training data for these cases were erroneous, the inference was wrong as well. The total number of such cases is estimated at 1550, constituting 35% of all error cases. If these cases are handled properly, we can expect a better accuracy for words starting with “đ”.
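The figures above can be sanity-checked with a few lines of arithmetic. The numbers are the ones quoted in this section; the variable names are just for illustration.

```python
total_tokens = 157_191   # tokens in the test data
sentences = 14_825       # sentences in the test data
wrong_tokens = 4_381     # tokens given a wrong label
d_bug_errors = 1_550     # estimated errors caused by the "đ" labeling issue

error_rate = wrong_tokens / total_tokens                          # overall error rate
d_share = d_bug_errors / wrong_tokens                             # share of errors due to "đ"
remaining_rate = (wrong_tokens - d_bug_errors) / total_tokens     # error rate without "đ" cases
avg_len = total_tokens / sentences                                # average words per sentence

print(f"{error_rate:.1%} {d_share:.1%} {remaining_rate:.1%} {avg_len:.1f}")
```

This gives an overall error rate of about 2.8%, of which roughly 35% comes from the “đ” issue, leaving about 1.8% for everything else, on sentences of about 10.6 words on average.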

The other cases that the system couldn’t handle well (the remaining ~2% error rate) fall into 3 main categories:

- Abbreviations. Exs: ‘đh-cđ’, ‘đhđcđ’, ‘hđnd’, ‘gd&đt’, ‘đkvđ’, ‘lđlđvn’, etc.

- Tokenization issues. Exs: ‘đồng/lượng’, ‘đồng/lít’, etc.

- Cases that are ambiguous, hard even for humans. This category accounts for the majority of the remaining error cases (after excluding the bug-related case above).

Fixing the issue with “đ” and handling the other error cases are left for future work.

A few concrete example outputs

1st line: Input to system (no accent)

2nd line: gold data

subsequent lines: words that are wrongly accented by the program

Accuracy < 0.7

Kinh ap trong biet do duong huyet.

Kính áp tròng biết đo đường huyết.

Wrong: trong vs tròng (correct)

Wrong: biệt vs biết (correct)

Wrong: do vs đo (correct) <- issue with “đ”

Correct = 4/7 -> Acc=0.57

Bat giam ba ‘trum’ de.

Bắt giam ba ‘trùm’ đề.

Wrong: bà vs ba (correct)

Wrong: dê vs đề (correct)

Correct = 3/5 -> Acc=0.6

Ke chuyen cuop… banh sung bo.

Kẻ chuyên cướp… bánh sừng bò.

Wrong: kể vs Kẻ (correct)

Wrong: súng vs sừng (correct)

Correct = 4/6 -> Acc=0.67

0.7 <= accuracy < 0.8

‘Ky su Syria cua VTV24 nen phat vao dip… 21.6’.

‘Ký sự Syria của VTV24 nên phát vào dịp… 21.6’.

Wrong: kỹ vs Ký (correct)

Wrong: sư vs sự (correct)

Wrong: phạt vs phát (correct)

Correct = 7/10 -> Acc=0.7

Tien ve Sai Gon trong ngay 30/4.

Tiến về Sài Gòn trong ngày 30/4.

Wrong: tiền vs Tiến (correct)

Wrong: vệ vs về (correct)

Correct = 5/7 -> Acc=0.71

Cach xu ly bi mat bo hop dong bao hiem nhan tho.

Cách xử lý bị mất bộ hợp đồng bảo hiểm nhân thọ.

Wrong: bí vs bị (correct)

Wrong: mật vs mất (correct)

Wrong: bỏ vs bộ (correct)

Correct = 9/12 -> Acc=0.75

Nam kim cham mang nhan Viet co thuc ‘Viet’?.

Nấm kim châm mang nhãn Việt có thực ‘Việt’?.

Wrong: nam vs Nấm (correct)

Wrong: nhân vs nhãn (correct)

Correct = 7/9 -> Acc=0.78

0.8 <= accuracy < 0.9

Chieu tang trong ga lon kinh dien cua tieu thuong.

Chiêu tăng trọng gà lợn kinh điển của tiểu thương.

Wrong: trong vs trọng (correct)

Wrong: lớn vs lợn (correct)

Correct = 8/10 -> Acc=0.8

An tuong nhung kiet tac nghe thuat khac da co dai.

Ấn tượng những kiệt tác nghệ thuật khắc đá cổ đại.

Wrong: khác vs khắc (correct)

Wrong: da vs đá (correct)

Correct = 9/11 -> Acc=0.82

Tuong lai quan he Viet-My: Hay nhin vao 20 nam toi va xa hon nua.

Tương lai quan hệ Việt-Mỹ: Hãy nhìn vào 20 năm tới và xa hơn nữa.

Wrong: viet-my vs Việt-Mỹ (correct)

Wrong: tối vs tới (correct)

Correct = 13/15 -> Acc=0.87

0.9 <= accuracy < 1

Dong phi bao tri duong bo: Anh ngay that chiu thiet.

Đóng phí bảo trì đường bộ: Anh ngay thật chịu thiệt.

Wrong: ngày vs ngay (correct)

Correct = 10/11 -> Acc=0.91

Bat 14 doi tuong dang sat phat tai song tai xiu.

Bắt 14 đối tượng đang sát phạt tại sòng tài xỉu.

Wrong: sông vs sòng (correct)

Correct = 10/11 -> Acc=0.91

Nu Than Tam Quoc – San pham da nen co phong cach hap dan.

Nữ Thần Tam Quốc – Sản phẩm đa nền có phong cách hấp dẫn.

Wrong: nên vs nền (correct)

Correct = 12/13 -> Acc=0.92

Nguoi trung tuyen Tong cuc truong:”Toi dam lam, dam chiu trach nhiem”.

Người trúng tuyển Tổng cục trưởng:”Tôi dám làm, dám chịu trách nhiệm”.

Wrong: truong:”toi vs trưởng:”Tôi (correct) <- Tokenization issue

Correct = 11/12 -> Acc=0.92

Con nguoi lai tau tu nan: Som mai di hoc, ai se hon con?.

Con người lái tàu tử nạn: Sớm mai đi học, ai sẽ hôn con?.

Wrong: hơn vs hôn (correct)

Correct = 13/14 -> Acc=0.93

Diem thi DH: Phong chay chua chay, Da Lat, GTVT Ha Noi, SPKT TP.HCM, Tien Giang….

Điểm thi ĐH: Phòng cháy chữa cháy, Đà Lạt, GTVT Hà Nội, SPKT TP.HCM, Tiền Giang….

Wrong: da vs Đà (correct)

Correct = 15/16 -> Acc=0.94

Ke hoach trien khai chuong trinh:”Han che su dung tui nylon vi moi truong” tren dia ban Thanh pho nam 2011.

Kế hoạch triển khai chương trình:”Hạn chế sử dụng túi nylon vì môi trường” trên địa bàn Thành phố năm 2011.

Wrong: trinh:”han vs trình:”Hạn (correct) <- Tokenization issue

Correct = 20/21 -> Acc=0.95

Acknowledgements

I first learned about Huggingface’s transformer library via a recommendation by Cong Duy Vu Hoang.

After playing around with the lib, I was looking for an application where I could experiment with the model fine-tuning process and get first-hand experience of the power of transformer-based models (seeing is believing).

The GPUs part was solved by Google Colab (and its PRO version to run longer trainings).

One day, I stumbled upon this paper, which used a transformer to do grammar correction via tagging. The paper made me think of this accent marking problem that I’d worked on before, and I realized I could formulate it as a tagging (token classification) problem as well.

Luckily, the training data for this problem need no manual annotation: the labels are already in the natural texts. It makes for a perfect learning toy.

The pre-trained model I used was XLM-Roberta Large [2], and the training data used for finetuning were article titles from the Binhvq News corpus.

Notes:

[1] I just realized that this remark overlooked the fact that this transformer model was finetuned from XLM-Roberta Large, which had been pretrained on billions of words. The HMM model wasn’t pretrained.

[2] In addition to the multilingual Roberta model, there’s another good model pretrained on only Vietnamese text: PhoBERT. The main reason I preferred the XLM model was PhoBERT’s tokenization scheme. For this problem of inserting accent marks, treating the phrase “sinh viên” (student) as 2 separate tokens is more suitable than treating it as a single token. As it turned out, the result with the XLM model was also very good, so I didn’t experiment with finetuning PhoBERT.

Photo credit: Photo by Karolina Nichitin from Unsplash
